The Power of Prompt Engineering: Unlocking the Full Potential of AI

As Artificial Intelligence (AI) reshapes industries, one emerging discipline is proving vital to unlocking its true power: prompt engineering.

While tools like GPT-4, Claude, and Gemini are widely known for their capabilities, their effectiveness depends heavily on how you interact with them. Prompt engineering isn’t just about giving instructions; it’s about communicating with a model clearly and deliberately. Done right, it turns these models from general-purpose assistants into focused, task-specific assets.

What Is Prompt Engineering?

Prompt engineering is the art and science of designing and refining prompts to guide AI models, particularly large language models (LLMs), toward the responses you want. A carefully crafted prompt gives the model the context, instructions, and examples it needs to understand your intent and respond usefully. Think of it as a roadmap that steers the model toward the specific output you have in mind.

In simple terms, a prompt is the text you give an AI model to trigger a response. A good prompt spells out context, tone, constraints, format, and the desired outcome, leaving the model as little room for ambiguity as possible.

For example:

- Asking “Write about climate change” may result in a generic paragraph.

- But asking “Write a 3-paragraph blog introduction on climate change’s impact on coastal cities, using a professional tone and citing two examples” is far more likely to yield a targeted, usable result.
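One way to make this difference concrete is to treat a prompt as a set of fields rather than a single sentence. The sketch below is illustrative only: `build_prompt` and its `length`, `tone`, and `requirements` parameters are hypothetical names chosen for this example, not part of any library.

```python
def build_prompt(task, *, length=None, tone=None, requirements=()):
    """Assemble a prompt from a task plus optional length, tone, and requirements.

    The fields are illustrative; real prompts may need others (audience, format).
    """
    lines = [task.rstrip(".") + "."]
    if length:
        lines.append(f"Length: {length}.")
    if tone:
        lines.append(f"Tone: {tone}.")
    for requirement in requirements:
        lines.append(f"Requirement: {requirement}.")
    return "\n".join(lines)


# The vague prompt supplies only a task.
vague = build_prompt("Write about climate change")

# The specific prompt pins down scope, length, tone, and supporting evidence.
specific = build_prompt(
    "Write a blog introduction on climate change's impact on coastal cities",
    length="3 paragraphs",
    tone="professional",
    requirements=["cite two concrete examples"],
)

print(vague)
print()
print(specific)
```

The specific version prints as four lines (task, length, tone, requirement), and each one narrows what the model is likely to produce.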

Prompt engineering bridges the gap between raw AI potential and precise business application.

Why Prompt Engineering Matters

LLMs are trained on vast datasets, but they don’t know your specific goals. They generate likely continuations of the prompt they are given, so a poorly written prompt tends to produce vague or irrelevant output.

Prompt engineering solves this by:

- Improving Accuracy: The clearer the input, the sharper the response. Clear prompts reduce (though they cannot eliminate) hallucinations, off-topic answers, and missed nuances.

- Controlling Output Style: Whether you need a formal tone, bullet points, JSON structure, or code snippets, prompts help shape the output.

- Optimizing Efficiency: A well-engineered prompt reduces the need for multiple iterations, saving time and compute cost.

- Enabling Complex Use Cases: From multi-step reasoning to persona-based responses, structured prompt workflows let models handle layered, nuanced tasks.
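The point about controlling output style is easiest to see with structured output. Here is a minimal sketch, assuming your application wants a JSON object back: the prompt states the exact shape, and the code checks the reply before using it, because a model can still return malformed or incomplete JSON. The key names `summary` and `risk_level` are hypothetical, and the model call itself is omitted.

```python
import json

# Hypothetical output contract, stated explicitly in the prompt.
JSON_INSTRUCTION = (
    "Respond only with a JSON object with two keys: "
    '"summary" (a string) and "risk_level" (one of "low", "medium", "high").'
)
ALLOWED_RISK_LEVELS = ("low", "medium", "high")


def build_summary_prompt(contract_text: str) -> str:
    """Combine the task, the format instruction, and the input text."""
    return f"Summarize the contract below.\n{JSON_INSTRUCTION}\n\n{contract_text}"


def parse_reply(reply: str) -> dict:
    """Parse a model reply and verify it matches the requested shape.

    Raises ValueError so the caller can retry or re-prompt.
    """
    try:
        data = json.loads(reply)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply is not a JSON object")
    if not isinstance(data.get("summary"), str):
        raise ValueError("missing or non-string 'summary'")
    if data.get("risk_level") not in ALLOWED_RISK_LEVELS:
        raise ValueError("'risk_level' must be 'low', 'medium', or 'high'")
    return data


# A hand-written reply standing in for real model output.
print(parse_reply('{"summary": "A two-year office lease.", "risk_level": "low"}'))
```

Validating the reply, rather than trusting the instruction alone, is what turns a formatting request into something downstream code can rely on.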

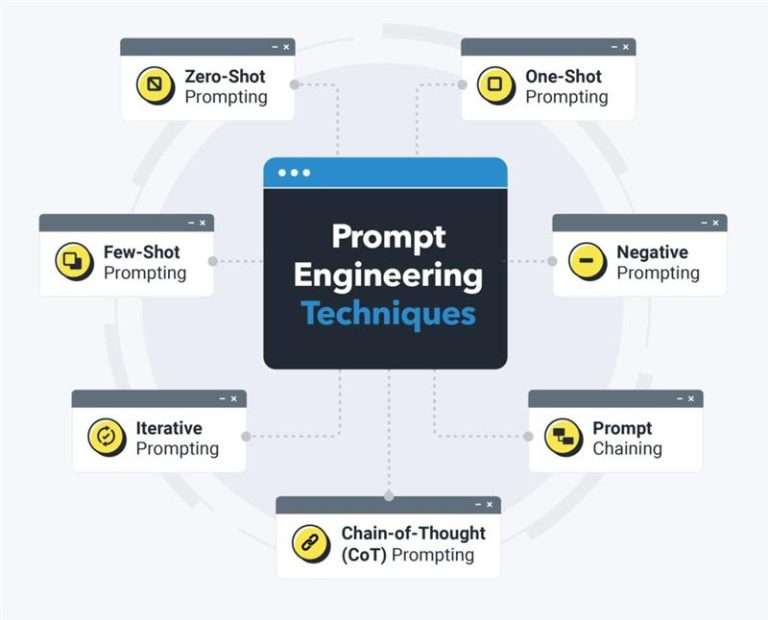

Common Techniques in Prompt Engineering

Prompt engineering has evolved from trial-and-error into a structured process. Here are some widely used techniques:

1. Few-shot and Zero-shot Prompting

Zero-shot prompting gives the model only a clear instruction; few-shot prompting adds a handful of worked input/output examples so the model can infer the task pattern. Examples tend to improve consistency, especially in repetitive tasks.
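The difference between the two is easiest to see side by side. This sketch builds both prompt styles for a hypothetical sentiment-classification task; the `Input:`/`Output:` labels are one common convention, not a requirement of any particular model.

```python
def zero_shot(instruction: str, text: str) -> str:
    """Instruction only: the model infers the expected format from the wording."""
    return f"{instruction}\n\nInput: {text}\nOutput:"


def few_shot(instruction: str, examples: list, text: str) -> str:
    """Instruction plus worked (input, output) pairs that demonstrate the pattern."""
    shots = "\n\n".join(f"Input: {inp}\nOutput: {out}" for inp, out in examples)
    return f"{instruction}\n\n{shots}\n\nInput: {text}\nOutput:"


instruction = "Classify the sentiment of each review as positive or negative."
examples = [
    ("The battery lasts all day.", "positive"),
    ("It stopped working after a week.", "negative"),
]
review = "Setup took five minutes and it just works."

print(zero_shot(instruction, review))
print("---")
print(few_shot(instruction, examples, review))
```

Both prompts end with an open `Output:` line, so the model's most natural continuation is the label itself; the few-shot version additionally shows it exactly which labels and format to use.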

2. Chain-of-Thought Prompting

Encourage the model to show its reasoning by prompting it to “think step-by-step.” This is especially useful in logic-heavy or analytical tasks.
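Here is a minimal sketch, assuming you want both the reasoning and a machine-readable result: the prompt asks for step-by-step working followed by a final `Answer:` line, and a small parser pulls that line out. The `Answer:` convention and both function names are hypothetical choices for this example. Models do not always follow the requested format, so the parser returns `None` rather than guessing.

```python
import re
from typing import Optional

# Appended to a question to request visible reasoning and a parseable final line.
COT_SUFFIX = (
    "\n\nThink step by step, then give the final result on its own line "
    "in the form 'Answer: <result>'."
)


def chain_of_thought_prompt(question: str) -> str:
    return question + COT_SUFFIX


def extract_answer(reply: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if there is none."""
    matches = re.findall(r"^Answer:[ \t]*(.+)$", reply, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


question = (
    "A shop sells pencils in packs of 12. Sam buys 3 packs "
    "and gives away 10 pencils. How many pencils does Sam have left?"
)
prompt = chain_of_thought_prompt(question)

# Hand-written reply illustrating the requested format (not real model output).
sample_reply = (
    "3 packs of 12 pencils is 3 * 12 = 36 pencils.\n"
    "Giving away 10 leaves 36 - 10 = 26.\n"
    "Answer: 26"
)
print(extract_answer(sample_reply))  # 26
```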

3. Instruction-based Prompting

Instruction-tuned models (LLMs trained to follow instructions) perform best when given explicit, specific tasks, such as: “Summarize this contract in two paragraphs, highlighting any risk clauses.”

4. Role-based Prompting

By assigning the model a persona like “You are a financial advisor” or “You are a legal analyst,” prompts can be tailored to specific tones, expectations, and formats.
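In chat-based APIs, a persona is usually supplied as a system-level instruction kept separate from the user’s request. The sketch below uses the common list-of-`role`/`content`-dictionaries shape; treat it as an assumption, since providers differ (some take the system prompt as a separate parameter rather than as a message), and check your provider’s documentation before sending it anywhere. `role_messages` is a hypothetical helper.

```python
def role_messages(persona: str, request: str) -> list:
    """Build a chat-style message list with the persona in a system message."""
    return [
        {"role": "system", "content": f"You are {persona}."},
        {"role": "user", "content": request},
    ]


messages = role_messages(
    "a financial advisor who explains trade-offs plainly and avoids jargon",
    "Should I pay off my student loan early or invest the money?",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the persona in its own message means the same role can be reused across many user requests without rewriting each one.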

Best Practices in Prompt Engineering

- Be Specific and Detailed: The more precise you are, the better the AI can meet your expectations. Instead of “tell me about the Renaissance,” try “provide a summary of the key political changes during the Renaissance in Florence.”

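- Use Examples: Including examples within your prompt can guide the model’s output. For instance, paste a sample introduction you like and ask the model to “write a blog post introduction about AI advancements in the same style as the example below.” The model can only imitate an example that is actually included in the prompt.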

- Leverage Role-playing: Asking the AI to assume a role can shape the tone and style of the response. For example: “Imagine you’re a science teacher explaining quantum computing to a high school student.”

- Iterative Refinement: Don’t hesitate to refine your prompt based on the output. Each round helps you home in on exactly what you’re looking for.

- Creative Constraints: Setting boundaries can inspire creativity. For example: “Write a short story involving time travel, a lost key, and a mystery, in fewer than 300 words.”
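Creative constraints and iterative refinement combine naturally: you can check a draft against the constraints you set and feed any violations back as a follow-up prompt. Below is a minimal sketch of that checking step. `check_constraints` and `refinement_prompt` are hypothetical helpers, and the substring test is a crude stand-in for judging whether each element genuinely appears in the story.

```python
def check_constraints(text: str, max_words: int, required_elements: list) -> list:
    """Return a list of constraint violations (empty if the draft passes).

    Uses a whitespace word count and case-insensitive substring matching,
    which is a crude check: it cannot tell whether an element is used well.
    """
    problems = []
    word_count = len(text.split())
    if word_count >= max_words:
        problems.append(f"{word_count} words; the limit is fewer than {max_words}")
    lowered = text.lower()
    for element in required_elements:
        if element.lower() not in lowered:
            problems.append(f"missing required element: {element!r}")
    return problems


def refinement_prompt(problems: list) -> str:
    """Turn violations into a follow-up prompt for the next iteration."""
    issues = "\n".join(f"- {problem}" for problem in problems)
    return "Revise the story to fix these issues:\n" + issues


# A hand-written draft standing in for a first model response.
draft = "The lost key opened a door, and behind it waited a mystery."
problems = check_constraints(draft, 300, ["time travel", "lost key", "mystery"])
if problems:
    print(refinement_prompt(problems))
```

Here the draft mentions the key and the mystery but not time travel, so the follow-up prompt asks for exactly that fix instead of a full rewrite.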

Examples of Effective Prompt Engineering

- Before: “Make a list.”

- After: “Create a detailed checklist for organizing a successful virtual tech conference, including technology requirements and participant engagement strategies.”

or

- Before: “Explain machine learning.”

- After: “Describe machine learning to a 10-year-old, using simple examples and avoiding technical jargon.”

The Future of Prompt Engineering

As LLMs become more integrated into workflows, prompt engineering will evolve from a niche skill into a core competency. Organizations are already building prompt libraries—collections of proven prompt templates for various use cases.
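A prompt library can start out very simple. The sketch below relies only on Python’s standard `string.Template`; it stores versioned templates under hypothetical names and renders the latest version by default, so an improved prompt can ship while earlier versions remain available for comparison or rollback.

```python
from string import Template
from typing import Optional

# Hypothetical in-memory prompt library keyed by (name, version).
PROMPT_LIBRARY = {
    ("contract_summary", 1): Template(
        "Summarize this contract in two paragraphs.\n\n$contract"
    ),
    ("contract_summary", 2): Template(
        "Summarize this contract in two paragraphs, "
        "highlighting any risk clauses.\n\n$contract"
    ),
}


def render(name: str, version: Optional[int] = None, **values: str) -> str:
    """Fill in a named template, defaulting to its latest version.

    Template.substitute raises KeyError if a placeholder is left unfilled.
    """
    if version is None:
        versions = [v for (n, v) in PROMPT_LIBRARY if n == name]
        if not versions:
            raise KeyError(f"no template named {name!r}")
        version = max(versions)
    return PROMPT_LIBRARY[(name, version)].substitute(**values)


print(render("contract_summary", contract="<contract text here>"))
```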

In the future, we’ll likely see:

- Prompt versioning and testing tools.

- A/B testing of prompt variants for optimization.

- Prompt marketplaces offering pre-built solutions.

- Automated prompt-tuning systems based on user feedback and performance.
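A/B testing prompt variants needs two basic pieces: a stable way to assign each user to a variant, and a per-variant success metric. The sketch below is a minimal illustration under assumptions: `assign_variant` and `success_rates` are hypothetical helpers, “success” stands for whatever outcome you log (for example, a user accepting a response), and the outcome list is made-up input for demonstration, not measured data.

```python
import hashlib
from collections import defaultdict


def assign_variant(user_id: str, variants: list) -> str:
    """Deterministically map a user to a prompt variant.

    SHA-256 is used instead of the built-in hash(), whose value for strings
    can change between Python processes, so assignments stay stable.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return variants[int.from_bytes(digest[:8], "big") % len(variants)]


def success_rates(outcomes) -> dict:
    """Compute {variant: success rate} from (variant, succeeded) pairs."""
    totals = defaultdict(int)
    successes = defaultdict(int)
    for variant, succeeded in outcomes:
        totals[variant] += 1
        successes[variant] += int(succeeded)
    return {variant: successes[variant] / totals[variant] for variant in totals}


variants = ["prompt_v1", "prompt_v2"]
print(assign_variant("user-42", variants))

# Made-up outcomes for illustration only.
outcomes = [
    ("prompt_v1", True),
    ("prompt_v1", False),
    ("prompt_v2", True),
    ("prompt_v2", True),
]
print(success_rates(outcomes))  # {'prompt_v1': 0.5, 'prompt_v2': 1.0}
```

With real traffic you would also need enough samples per variant and a statistical significance test before declaring a winner; four outcomes, as here, prove nothing.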

Prompt engineering is quickly becoming the “UI/UX of AI.” Just as no app thrives without good design, no AI thrives without good prompting.

At TechRover™ Solutions, we specialize in designing intelligent AI systems powered by expert-level prompt engineering. Our team works closely with clients across industries to develop high-performing, reliable, and purpose-driven AI integrations.

Prompt engineering is less about mastering a complex technical skill and more about learning to communicate effectively with AI.

Whether you’re exploring AI for the first time or refining an existing system, we help you move beyond experimentation toward real results.

✅ Build reusable prompt workflows

✅ Reduce output errors and inconsistencies

✅ Tailor AI to your tone, domain, and compliance needs

✅ Integrate seamlessly into your product or platform

Let’s make your AI work smarter, not harder, one prompt at a time.